SDEdit: Guided Image Synthesis and Editing with Stochastic Differential Equations

Chenlin Meng

Yutong He

Yang Song

Jiaming Song

Jiajun Wu

Jun-Yan Zhu

Stefano Ermon

Stanford University, Carnegie Mellon University

In ICLR 2022

Paper | GitHub | Colab

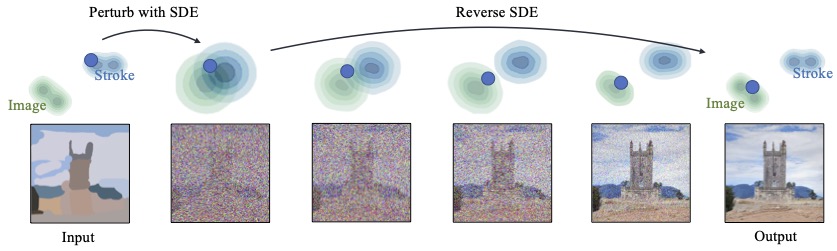

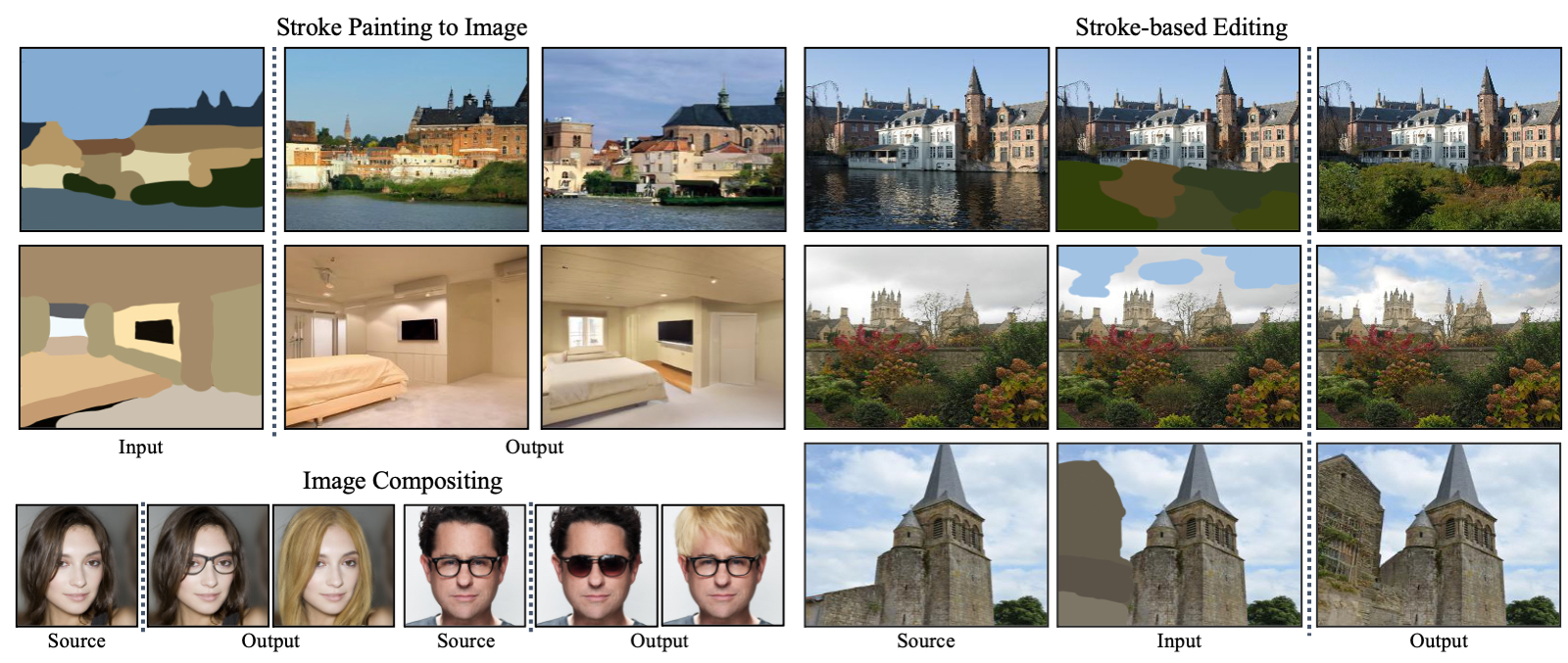

SDEdit is an image synthesis and editing framework based on stochastic differential equations (SDEs). SDEdit supports stroke-based image synthesis, stroke-based image editing, and image compositing without task-specific optimization.

Abstract

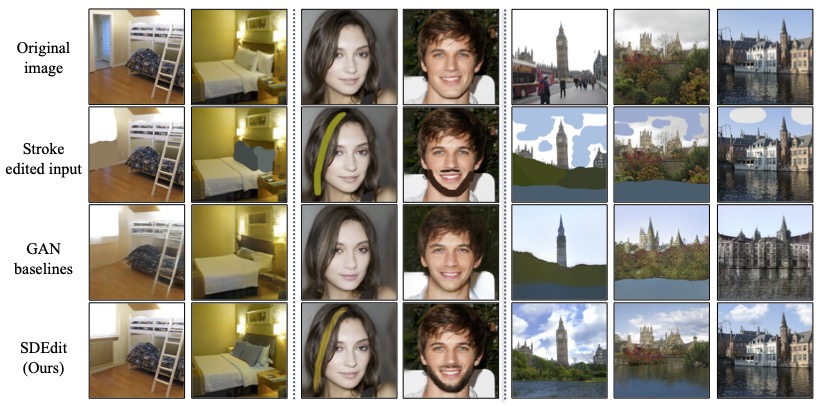

Guided image synthesis enables everyday users to create and edit photo-realistic images with minimal effort. The key challenge is balancing faithfulness to the user input (e.g., hand-drawn colored strokes) against realism of the synthesized image. Existing GAN-based methods attempt to achieve this balance using either conditional GANs or GAN inversion, both of which are challenging and often require additional training data or loss functions for each application. To address these issues, we introduce a new image synthesis and editing method, Stochastic Differential Editing (SDEdit), based on a diffusion model generative prior, which synthesizes realistic images by iteratively denoising through a stochastic differential equation (SDE). Given an input image with a user guide of any type, SDEdit first adds noise to the input, then denoises the resulting image through the SDE prior to increase its realism. SDEdit does not require task-specific training or inversion and naturally balances realism and faithfulness. According to a human perception study, SDEdit significantly outperforms state-of-the-art GAN-based methods by up to 98.09% on realism and 91.72% on overall satisfaction scores across multiple tasks, including stroke-based image synthesis and editing as well as image compositing.
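The two steps described in the abstract, adding noise to the guide and then denoising through an SDE prior, can be sketched in a few lines. The following is a minimal illustration, not the released implementation: it assumes a variance-exploding SDE with a geometric noise schedule, uses a simple Euler-Maruyama discretization, and replaces the trained score network with the analytic score of a toy Gaussian "image" distribution. The names `sigma`, `toy_score`, `sdedit`, and the parameters `t0` and `num_steps` are hypothetical choices made for this sketch.

```python
import numpy as np


def sigma(t, sigma_min=0.01, sigma_max=10.0):
    """Noise level at time t in [0, 1] (assumed geometric schedule)."""
    return sigma_min * (sigma_max / sigma_min) ** t


def toy_score(x, t, mean=0.5, std=0.1):
    """Stand-in for a trained score network (assumption for this sketch).

    Returns the exact score of N(mean, std^2) data after it has been
    perturbed with Gaussian noise of standard deviation sigma(t).
    """
    return -(x - mean) / (std ** 2 + sigma(t) ** 2)


def sdedit(guide, score_fn, t0=0.5, num_steps=500, rng=None):
    """Perturb the guide to noise level sigma(t0), then denoise back to t = 0."""
    rng = np.random.default_rng() if rng is None else rng
    # Step 1: add noise to the user guide.
    x = guide + sigma(t0) * rng.standard_normal(guide.shape)
    # Step 2: simulate the reverse-time SDE from t0 down to 0.
    dt = t0 / num_steps
    for n in range(num_steps, 0, -1):
        t = t0 * n / num_steps
        # Variance removed when moving from time t to time t - dt.
        step_var = sigma(t) ** 2 - sigma(t - dt) ** 2
        z = rng.standard_normal(guide.shape)
        x = x + step_var * score_fn(x, t) + np.sqrt(step_var) * z
    return x


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    guide = np.zeros((8, 8))  # a flat "stroke" far from the toy prior mean
    realistic = sdedit(guide, toy_score, t0=1.0, rng=rng)
    faithful = sdedit(guide, toy_score, t0=0.1, rng=rng)
    print("mean with t0=1.0:", realistic.mean())
    print("mean with t0=0.1:", faithful.mean())
```

In this sketch, `t0` controls the trade-off named in the abstract: a large `t0` injects more noise, so the result is dominated by the prior (more realism under the toy model), while a small `t0` keeps the result close to the guide (more faithfulness). With a real diffusion model, `toy_score` would be replaced by the trained network's score estimate.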

Paper

arXiv 2108.01073, 2021.

Citation

Chenlin Meng, Yutong He, Yang Song, Jiaming Song, Jiajun Wu, Jun-Yan Zhu and Stefano Ermon. "SDEdit: Guided Image Synthesis and Editing with Stochastic Differential Equations". In International Conference on Learning Representations (ICLR) 2022.

Bibtex

Introducing SDEdit: a powerful image synthesis and editing technique

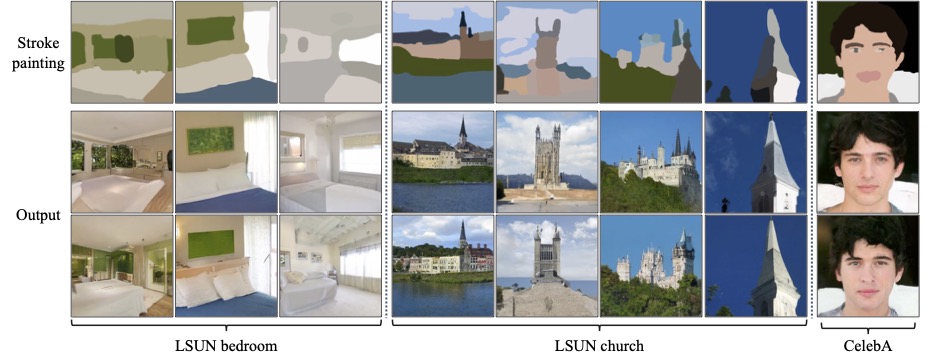

Synthesizing images from strokes with SDEdit

Scribble-based image editing with SDEdit

Image compositing with SDEdit